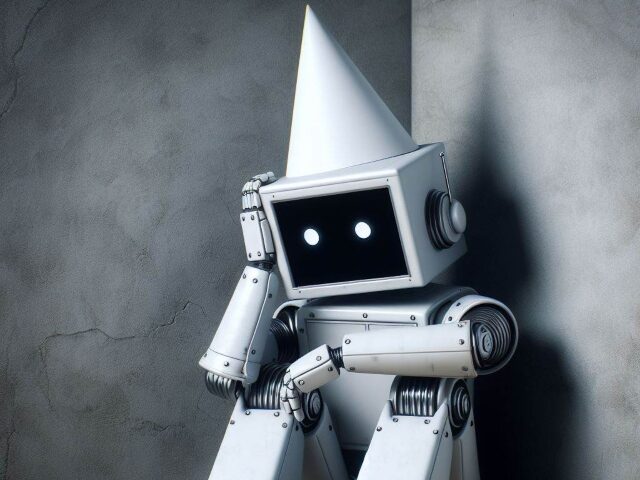

A leading “misinformation expert” has come under fire for citing seemingly nonexistent sources in an affidavit supporting Minnesota’s new law banning some AI-generated deepfakes. Opposing lawyers claim the Stanford professor used AI to write his legal document, which backfired when the system “hallucinated” by generating false references to imaginary academic papers.

Minnesota Reformer reports that Professor Jeff Hancock, the founding director of the Stanford Social Media Lab, is facing accusations of citing fabricated sources in his affidavit supporting Minnesota’s recently enacted legislation that prohibits the use of “deep fake” technology to influence elections. The law, which is being challenged in federal court by a conservative YouTuber and Republican state Rep. Mary Franson on First Amendment grounds, has sparked a debate about the role of AI-generated content in legal matters.

Hancock, who is known for his research on deception and technology, submitted a 12-page expert declaration at the request of Minnesota Attorney General Keith Ellison (D). However, attorneys representing the plaintiffs have discovered that several academic works cited in the declaration appear to be non-existent. For instance, a study titled “The Influence of Deepfake Videos on Political Attitudes and Behavior,” allegedly published in the Journal of Information Technology & Politics in 2023, cannot be found in the journal or any academic databases. The pages referenced in the declaration contain entirely different articles.

The plaintiffs’ attorneys suggest that the citation bears the hallmarks of an “artificial intelligence (AI) ‘hallucination,’” likely generated by a large language model such as ChatGPT. They question how this “hallucination” ended up in Hancock’s declaration and argue that it calls the entire document into question. Libertarian law professor Eugene Volokh also found that another cited study, “Deepfakes and the Illusion of Authenticity: Cognitive Processes Behind Misinformation Acceptance,” does not appear to exist.

The use of AI-generated content in legal proceedings has led to several embarrassing incidents in recent years. In 2023, two New York lawyers were sanctioned by a federal judge for submitting a brief containing citations of non-existent legal cases made up by ChatGPT. While some lawyers involved in previous mishaps have pleaded ignorance about the software’s limitations and tendency to fabricate information, Hancock’s expertise in technology and misinformation makes the fake citations particularly concerning.

Frank Bednarz, an attorney for the plaintiffs, argues that proponents of the deep fake law claim AI-generated content cannot be countered by fact-checks and education, unlike other forms of online speech. However, by calling out the AI-generated fabrication to the court, Bednarz believes they demonstrate that “the best remedy for false speech remains true speech — not censorship.”

Read more at Minnesota Reformer here.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.
