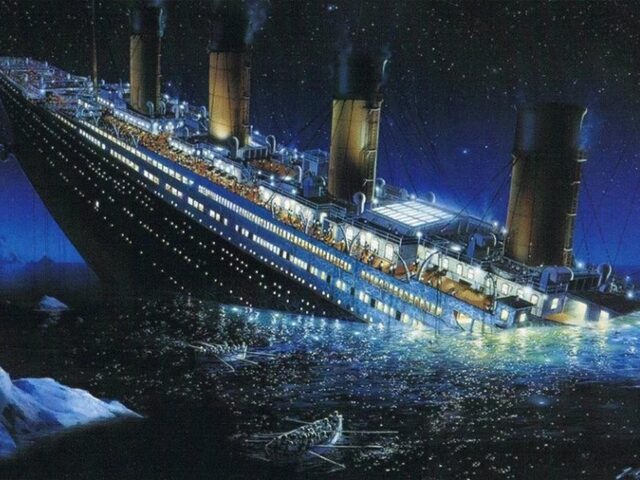

A former OpenAI safety employee has raised concerns about the company’s approach to artificial general intelligence (AGI), likening it to the ill-fated Titanic’s prioritization of speed over safety.

Business Insider reports that William Saunders, who spent three years on OpenAI’s “superalignment” safety team, has voiced concerns about the direction the company is taking with artificial intelligence. In a recent interview on tech YouTuber Alex Kantrowitz’s podcast, Saunders drew a stark comparison between OpenAI’s current path and that of the White Star Line, the company that built the Titanic.

Saunders, who resigned from OpenAI in February, expressed growing unease with the company’s dual focus on achieving AGI while simultaneously rolling out paid products. He noted, “They’re on this trajectory to change the world, and yet when they release things, their priorities are more like a product company. And I think that is what is most unsettling.”

The former safety employee’s concerns stem from what he sees as a shift in priorities within OpenAI. Saunders explained that during his tenure, he often asked himself whether the company’s approach was closer to the meticulous, risk-aware Apollo space program or to the Titanic. Over time, he felt that OpenAI’s leadership was increasingly making decisions that prioritized “getting out newer, shinier products” over careful risk assessment and mitigation.

Saunders emphasized the need for a more cautious approach, similar to that of the Apollo program, which he characterized as an ambitious project that “was about carefully predicting and assessing risks” while pushing scientific boundaries. He contrasted this with the Titanic, built by the White Star Line amid fierce competition to produce ever-larger ocean liners, potentially compromising safety for speed and luxury.

Saunders takes the analogy further. He fears that OpenAI may be overly reliant on its current safety measures and research, much like the Titanic’s builders, who believed their ship to be unsinkable. “Lots of work went into making the ship safe and building watertight compartments so that they could say that it was unsinkable,” he said. “But at the same time, there weren’t enough lifeboats for everyone. So when disaster struck, a lot of people died.”

In Saunders’ view, a “Titanic disaster” for AI could manifest in various forms, including models capable of launching large-scale cyberattacks, manipulating public opinion en masse, or aiding in the development of biological weapons. To mitigate these risks, he advocates for additional “lifeboats” in the form of delayed releases of new language models to allow for more thorough research into potential harms.

Saunders’ concerns are not isolated. In early June, a group of former and current employees of Google DeepMind and OpenAI, including Saunders himself, published an open letter warning that current industry oversight was insufficient to guard against potential catastrophes for humanity.

The letter calls for a “right to warn about artificial intelligence” and asks for a commitment to four principles around transparency and accountability. These principles include a provision that companies will not force employees to sign non-disparagement agreements that prohibit airing risk-related AI issues and a mechanism for employees to anonymously share concerns with board members.

The employees emphasize the importance of their role in holding AI companies accountable to the public, given the lack of effective government oversight. They argue that broad confidentiality agreements block them from voicing their concerns, except to the very companies that may be failing to address these issues.

Read more at Business Insider.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.
