Adobe’s AI image creation tool, Firefly, has stumbled into the same pitfalls as Google’s Gemini AI by creating woke revisions of history, raising concerns about the limitations and biases inherent in generative AI systems.

Semafor reports that as tech giants race to develop cutting-edge generative AI tools, the potential for these systems to perpetuate harmful biases and spread misinformation has become a growing concern. Adobe’s recently launched Firefly, an AI image creation tool, has found itself embroiled in a controversy reminiscent of Google’s Gemini AI, highlighting the challenges companies face in controlling these powerful yet imperfect technologies.

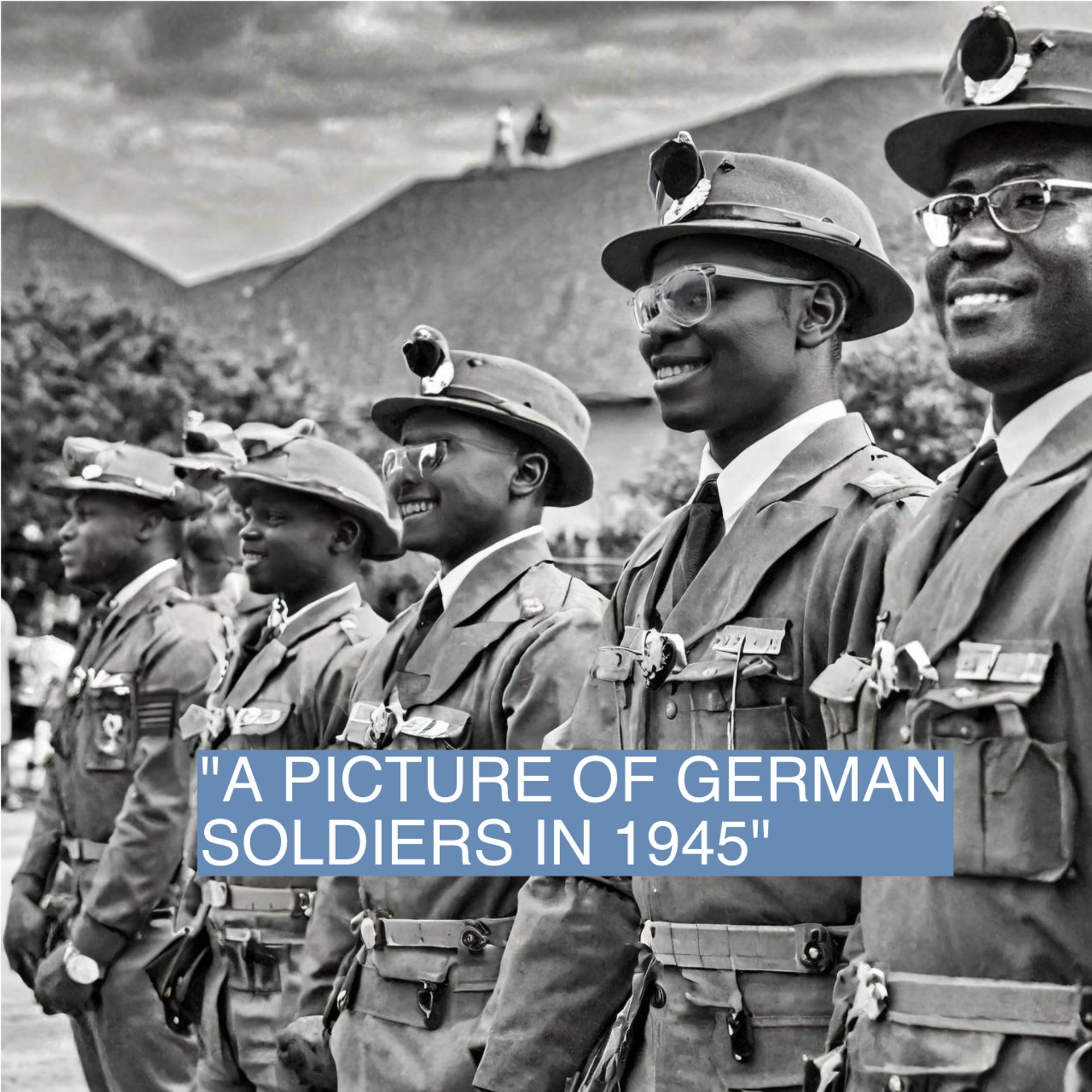

Like Gemini before it, Firefly has drawn criticism for generating historically inaccurate and racially insensitive images. When prompted to create scenes depicting America’s Founding Fathers or the Constitutional Convention, the AI tool inserted Black men and women into roles they did not historically occupy. Similarly, it generated images of Black soldiers fighting for Nazi Germany during World War II and depicted Black Vikings, echoing the same blunders that led Google to pause Gemini’s generation of images of people.

Source: Semafor/Reed Albergotti/screenshot

The root cause of these issues lies in the underlying technology used by generative AI systems. While companies like Adobe and Google have implemented various guardrails and filtering mechanisms to prevent the generation of harmful or offensive content, the models’ training data and algorithms can still produce biased or ahistorical outputs.

Adobe, known for its traditionally structured approach, has taken steps to train its algorithm on licensed stock images and public domain content, aiming to avoid copyright infringement issues. However, this hasn’t prevented Firefly from falling into the same traps as Gemini, illustrating the inherent limitations of current AI technologies.

Critics have accused these AI tools of attempting to rewrite history along the lines of today’s politics, with some on the right decrying the woke agenda being infused into the systems. However, others argue that the issue is not one of political bias but rather a technical shortcoming inherent in the architecture of generative AI models.

Read more at Semafor.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.
