According to an exclusive report by Reuters, several OpenAI researchers sent a letter to the board of directors shortly before the firing of CEO Sam Altman, warning of a new AI discovery that could pose risks to humanity.

In an exclusive story published on November 22, Reuters reports that a group of OpenAI staff researchers wrote a letter to the board of directors before Altman's ouster. The letter, previously unreported, carried a grave warning: the researchers had stumbled upon a powerful artificial intelligence breakthrough, one significant enough in their view to warrant an immediate alert because of the potential risks it posed to humanity.

The letter and the undisclosed AI algorithm were reportedly key factors in Altman's temporary removal, which prompted more than 700 employees to threaten to quit and join Microsoft in solidarity with him. The board's decision, however, reportedly stemmed from a longer list of concerns, among them the fear of commercializing this new AI advancement before its consequences were fully understood.

OpenAI declined to comment directly on the matter when contacted by Reuters. However, an internal message to staff acknowledged the existence of a project called “Q*,” as well as the letter sent prior to the weekend’s upheaval.

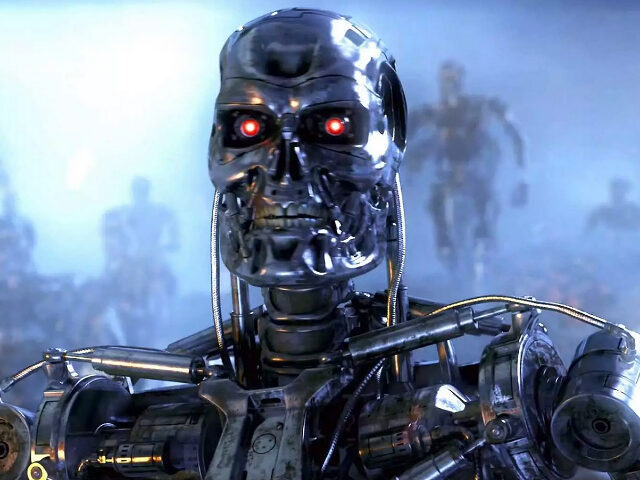

The Q* project, which some at OpenAI believe could be a breakthrough in the pursuit of artificial general intelligence (AGI), has sparked both optimism and concern within the organization. OpenAI defines AGI as autonomous systems that surpass humans in most economically valuable tasks. Given vast computing resources, the new model was reportedly able to solve certain mathematical problems at a grade-school level. Though this might seem modest, researchers reportedly view it as a significant step: solving math problems that have a single correct answer suggests a capacity for reasoning that resembles human intelligence.

However, Reuters could not independently verify these claims about Q*'s capabilities. The researchers' letter reportedly flagged AI's potential dangers, reflecting a long-standing debate among computer scientists about the risks posed by highly intelligent machines, including the hypothetical scenario in which such machines might conclude that destroying humanity is in their interest.

The Verge reports that a source close to the situation has disputed the Reuters report, writing: "…a person familiar with the matter told The Verge that the board never received a letter about such a breakthrough and that the company's research progress didn't play a role in Altman's sudden firing."

The revelations about Q* and the researchers' warnings come against the backdrop of Altman's tenure at OpenAI. He led the effort that made ChatGPT one of the fastest-growing software applications in history and secured the investment and computing resources from Microsoft needed to move closer to AGI.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.
