Meredith Whittaker, the president of private messaging app Signal, shed light on the intrinsic link between AI and Big Tech’s surveillance capitalism, emphasizing the profound implications of the technology on privacy and user data during her appearance at TechCrunch Disrupt 2023. Whittaker went so far as to label AI as “surveillance technology,” which may be why the Silicon Valley Masters of the Universe are rushing to integrate AI into everything they do.

TechCrunch reports that Whittaker, a prominent figure in the tech industry, examined the intertwined relationship between AI and surveillance at the TechCrunch Disrupt 2023 event, offering insights into how pervasive surveillance has become in the field of AI.

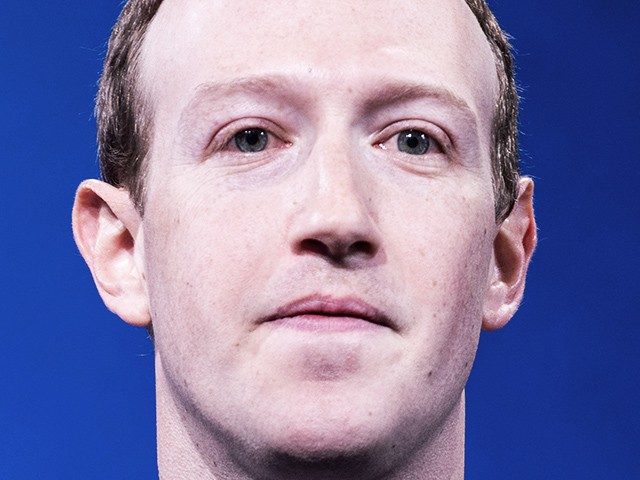

Whittaker’s discussion centered on her assertion that AI is fundamentally a “surveillance technology.” She added that AI is deeply rooted in the big data and targeting industry, an area dominated by tech giants like Google and Facebook (now known as Meta). According to Whittaker, the evolution of AI extends and intensifies the surveillance capitalism trend that has been in play since the dawn of social media. “AI is a way, I think, to entrench and expand the surveillance business model,” she remarked, highlighting how inseparable AI is from extensive data monetization practices.

The conversation also touched on practical applications of AI, such as facial recognition. These technologies, she explained, generate biometric data about individuals that might not even be completely accurate, and that data drives determinations and predictions that in turn shape people’s access to resources and opportunities. “These are ultimately surveillance systems that are being marketed to those who have power over us generally: our employers, governments, border control, etc.,” Whittaker stated.

The development of these sophisticated AI systems also depends heavily on human labor. Whittaker pointed out the paradox that low-paid workers are needed to assemble the datasets on which AI technologies are built. “It’s thousands and thousands of workers paid very little… and there’s no other way to create these systems, full stop,” she said.

Despite the overarching theme of surveillance and exploitation, Whittaker acknowledged that non-exploitative AI applications exist. She cited an example developed by Signal itself: a small on-device model used for a face blur feature, designed to protect biometric data.

Read more at TechCrunch here.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.
