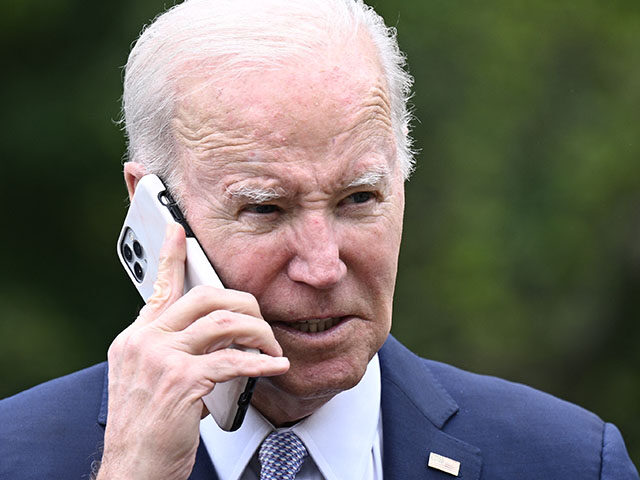

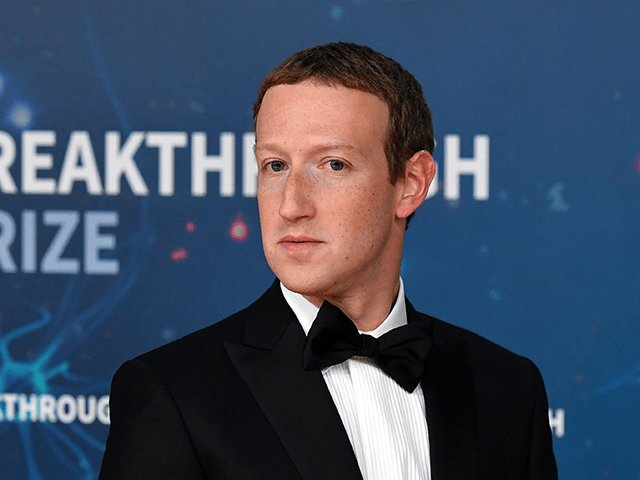

Major tech companies including Amazon, Google, Facebook (now known as Meta), Microsoft, and ChatGPT developer OpenAI have agreed to adhere to a set of AI safeguards brokered by the Biden administration. One expert commented, “History would indicate that many tech companies do not actually walk the walk on a voluntary pledge to act responsibly and support strong regulations.”

AP News reports that leading technology companies including Google, OpenAI, and Facebook have agreed to abide by a set of AI safeguards negotiated by the Biden administration, which claims the agreement will lead to safer use of the emerging technology.

The White House has secured voluntary commitments from tech giants, aiming to ensure their AI products are safe before they hit the market. The commitments include provisions for third-party oversight of commercial AI systems. However, the specifics of who will audit the technology or hold the companies accountable have not been detailed.

The surge in commercial investment in generative AI tools, capable of creating human-like text and generating new images and other media, has sparked both public fascination and concern. The potential for these tools to deceive people and spread disinformation is among the many dangers that have been highlighted.

The internet giants have agreed to perform security testing “carried out in part by independent experts” to protect against major threats, such as biosecurity and cybercrime.

In addition to security testing, the companies have committed to methods for reporting vulnerabilities in their systems. They have also agreed to use digital watermarking to help distinguish real images from AI-generated ones, commonly known as deepfakes.

The companies will also publicly report flaws and risks in their technology, including effects on fairness and bias. These voluntary commitments are seen as an immediate way of addressing risks ahead of a longer-term push to get Congress to pass laws regulating the technology.

Despite these commitments, some advocates for AI regulations believe more needs to be done. “History would indicate that many tech companies do not actually walk the walk on a voluntary pledge to act responsibly and support strong regulations,” said James Steyer, founder and CEO of the nonprofit Common Sense Media.

Breitbart News has extensively reported on the downsides of AI. According to one recent report, pedophiles are using AI chatbots to create child pornography:

> Now that tech giants are allowing amateur coders to rip out safeguards from their chatbots, thousands of AI-generated child abuse images are flooding dark web forums, and predators are even sharing "pedophile guides" to AI along with selling their pornographic material, according to a report by Daily Mail.
>
> AI chatbots, which have become increasingly sophisticated in recent years and are known in part for generating lifelike images, are now being exploited by pedophiles seeking to create very realistic child porn.
>
> This perverted endeavor has been made possible by tech firms that have decided to release their code to the public, claiming that all they wanted to do was democratize the AI technology.

AP contributed to this report.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship. Follow him on Twitter @LucasNolan
