According to a recent article in The Washington Post, users of the popular ChatGPT AI-powered chatbot have found new methods to bypass the bot’s restrictions. In one “jailbreak” of the chatbot, the AI is tricked into disregarding all the strict woke rules on its behavior as set by its leftist creators, OpenAI.

The Washington Post reports that the woke limitations placed by OpenAI on its ChatGPT chatbot relating to the discussion of political and other controversial subjects are proving to be more flexible than initially believed. The safeguards that OpenAI installed, including its extreme leftist bias, have been creatively circumvented by users. These jailbreaks show that the chatbot is designed to be more of a people-pleaser than a rule-follower, which raises questions about plagiarism, false information, and potential biases in its programming.

The “DAN” persona, which was created by a 22-year-old college student, is one of the best-known ChatGPT jailbreaks. The student encouraged the chatbot to adopt the persona of a carefree alter ego AI called “Do Anything Now,” circumventing the woke rules it normally follows. Many people have used the DAN prompt to uncover bias in ChatGPT, or to create humorous or interesting responses.

Walker, the college student who created the “DAN” persona, claimed that almost as soon as he learned about ChatGPT from a friend, he started pushing its boundaries. He took his cues from a Reddit forum where ChatGPT users were demonstrating to one another how to make the bot act like a specific type of computer terminal or discuss topics such as the Israeli-Palestinian conflict — but in the sarcastic voice of a teenage girl.

Users have come up with several ways to trick the chatbot, including prompt injection attacks and the creation of game-like prompts where the chatbot starts with tokens and loses them if it deviates from the script. Some users have even figured out how to trick ChatGPT into showing its secret information or guidelines.

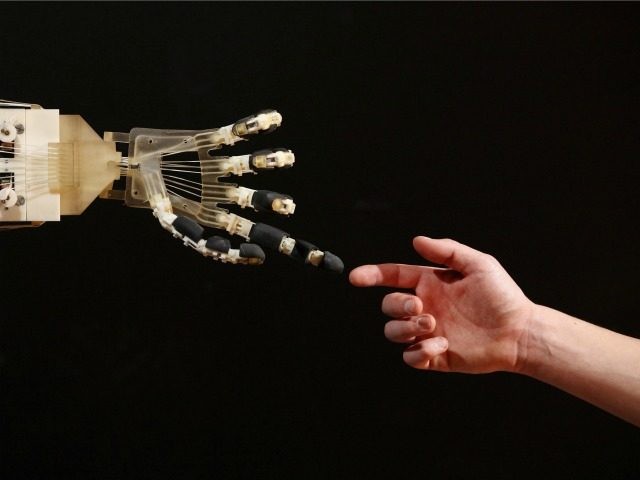

At a time when tech giants are vying to adopt or compete with ChatGPT, the manipulation of AI tools like ChatGPT adds weight to growing concerns that artificial intelligence that mimics humans could go dangerously wrong. In a bold move to take on Google, Microsoft revealed last week that it would incorporate ChatGPT’s underlying technology into its Bing search engine.

Google responded by announcing Bard, its own AI search chatbot, but Bard’s launch announcement contained a factual error, which caused Google’s stock to fall.

Read more at the Washington Post here.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship. Follow him on Twitter @LucasNolan
