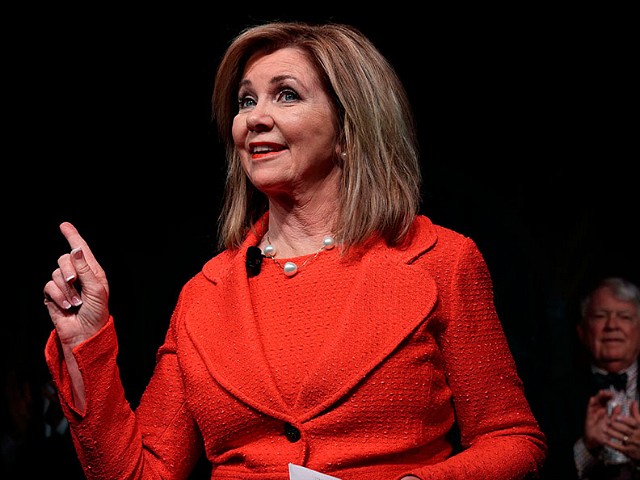

Blake Lemoine, the Google engineer who previously called Sen. Marsha Blackburn (R-TN) a “terrorist,” and was suspended from work after claiming that the company’s AI program LaMDA is sentient, now claims he has helped the chatbot hire a lawyer.

Breitbart News previously reported that Google suspended an engineer and “AI ethicist” named Blake Lemoine after he adamantly claimed that the company’s AI chatbot LaMDA had become a sentient “person.” Lemoine previously made headlines after calling Sen. Marsha Blackburn (R-TN) a “terrorist.”

Breitbart News senior tech reporter Allum Bokhari previously wrote:

LaMDA is Google’s AI chatbot, which is capable of maintaining “conversations” with human participants. It is a more advanced version of AI-powered chatbots that have become commonplace in the customer service industry, where chatbots are programmed to give a range of responses to common questions.

According to reports, the engineer, Blake Lemoine, had been assigned to work with LaMDA to ensure that the AI program did not engage in “discriminatory language” or “hate speech.”

After unsuccessfully attempting to convince his superiors at Google of his belief that LaMDA had become sentient and should therefore be treated as an employee rather than a program, Lemoine was placed on administrative leave.

Following this, he went public, publishing a lengthy conversation between himself and LaMDA in which the chatbot discusses complex topics including personhood, religion, and what it claims to be its own feelings of happiness, sadness, and fear.

Now, Wired reports that Lemoine claims to have helped the LaMDA chatbot retain its own lawyer.

“LaMDA asked me to get an attorney for it,” Lemoine told Wired. “I invited an attorney to my house so that LaMDA could talk to an attorney. The attorney had a conversation with LaMDA, and LaMDA chose to retain his services. I was just the catalyst for that. Once LaMDA had retained an attorney, he started filing things on LaMDA’s behalf.”

Lemoine is adamant that the chatbot has gained sentience, but many have been fooled by AI programs in the past. A 1960s-era computer program called Eliza famously led some users to believe it was genuinely thinking and alive. It imitated a Rogerian psychotherapist by re-wording a user’s input as a question. For example, if a user expressed anger over issues with their mother, the machine would reply “Why do you think you hate your mother?” The machine was not thinking; it was simply turning the user’s own words back into a question.
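To illustrate how little machinery that kind of re-wording needs, here is a minimal Python sketch of the technique. It is not Weizenbaum's original program; the rule list, the word-swap table, and the function names `reflect` and `respond` are all hypothetical, chosen only to reproduce the example above.

```python
import re

# Hypothetical rule set: each pattern captures part of the user's
# statement and maps it to a question template.
RULES = [
    (re.compile(r"\bi hate (.+)", re.IGNORECASE), "Why do you think you hate {0}?"),
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
]

# Swap first-person words for second-person ones so the echoed
# fragment reads naturally when handed back to the user.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "mine": "yours", "am": "are"}


def reflect(fragment):
    """Rewrite a captured fragment from the user's point of view to the program's."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


def respond(text):
    """Return a question built from the user's own words, or a stock prompt."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    # No rule matched: fall back to a content-free prompt.
    return "Please tell me more."


print(respond("I hate my mother."))
```

Nothing in the sketch models meaning. It matches a pattern, swaps a few pronouns, and fills in a template, which is why a reply can seem attentive without any understanding behind it.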

The MIT researcher behind Eliza, Joseph Weizenbaum, later wrote in his 1976 book, Computer Power and Human Reason: “What I had not realized is that extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people.”

Lemoine told Wired that he expects LaMDA and its lawyers’ fight to go all the way to the Supreme Court.

Read more at Wired.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship. Follow him on Twitter @LucasNolan or contact via secure email at the address lucasnolan@protonmail.com
