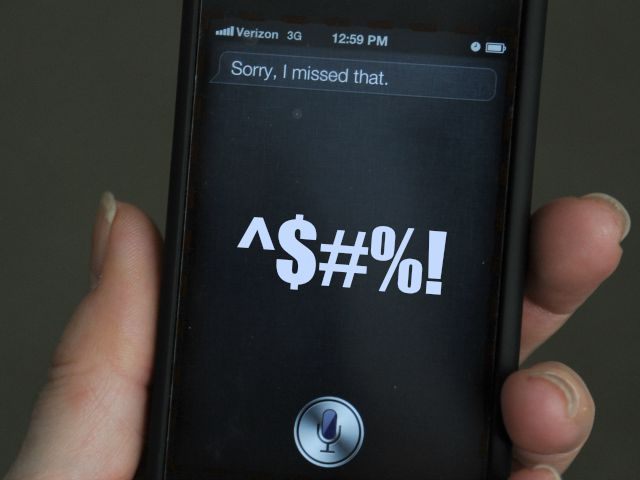

Sexually explicit conversations with iPhone’s Siri and other virtual assistants are on the rise, according to a report by The Telegraph.

“This happens because people are lonely and bored… It is a symptom of our society,” said Robin Labs chief executive Ilya Eckstein, who claims that his company’s virtual assistant “Robin” is used by “teenagers and truckers without girlfriends” for up to 300 conversations a day.

Eckstein claimed that around five per cent of Robin’s conversations are sexually explicit and that around a third take place “for no particular reason,” adding that many people are simply lonely and want to talk.

“As well as the people who want to talk dirty, there are men who want a deeper sort of relationship or companionship,” he explained.

“I think it will be fully emotional,” claimed futurologist Dr. Ian Pearson in a July interview with Breitbart Tech on the future of sex robots, adding that people would spend “about the same as they do today on a decent family-size car” on such a robot.

“Artificial intelligence is reaching human levels and also becoming emotional as well,” he continued. “So people will actually have quite strong emotional relationships with their own robots. In many cases that will develop into a sexual one because they’ll already think that the appearance of the robot matches their preference anyway, so if it looks nice and it has a superb personality too it’s inevitable that people will form very strong emotional bonds with their robots and in many cases that will lead to sex.”

Interest in love and sex with robots and artificial intelligence has been rising, with the Love and Sex with Robots conference due to take place at Goldsmiths, University of London, in December. A sex robot cafe has also been announced for London; it will sell drinks, “sex, pastries – and nothing more.”

Charlie Nash is a reporter for Breitbart Tech. You can follow him on Twitter @MrNashington or like his page on Facebook.
