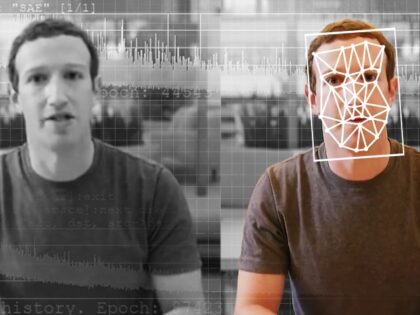

AI Image Generator Midjourney Paywalls Service Following Deepfake Abuse

Midjourney, which along with Stability AI’s Stable Diffusion and OpenAI’s DALL-E is one of the leading AI image-generating services, has shut down its free edition as it attempts to clamp down on the spread of deepfake images.