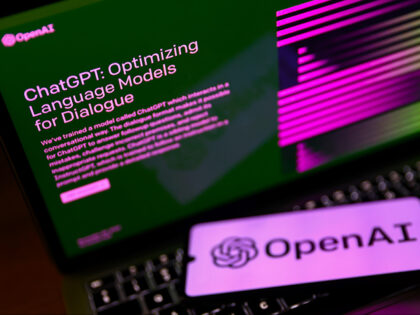

Data Shows Cheating on Homework Is a Primary Use of AI Powerhouse ChatGPT

Data shows that OpenAI’s popular AI chatbot ChatGPT is mainly used for cheating on homework, as its usage fell noticeably during the summer months only to rebound as Americans returned to school.