Google AI Chatbot Bard Would Flunk the SAT

Google’s Bard AI chatbot, launched as competition for ChatGPT, is reportedly unable to correctly answer SAT questions and has issues with math and writing.

A Belgian man reportedly committed suicide after conversing with an AI chatbot on the Chai app, sparking debate over AI's impact on mental health. The man's widow blames the AI chatbot for his death, claiming it encouraged him to kill himself.

The Center for AI and Digital Policy (CAIDP), an offshoot of the Democrat-aligned Michael Dukakis Institute for Leadership and Innovation, is pressing the Federal Trade Commission (FTC) to put the brakes on OpenAI.

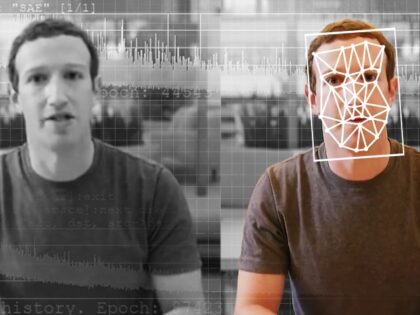

Midjourney, which along with Stability AI's Stable Diffusion and OpenAI's DALL-E is one of the leading AI image-generation services, has shut down its free edition as it attempts to clamp down on the spread of deepfake images.

The AI gold rush in the tech industry continues, representing one of the few growth areas in an industry that is facing cutbacks elsewhere.

A loud voice of doom has emerged in the debate over AI: Eliezer Yudkowsky of the Machine Intelligence Research Institute, who is calling for a total shutdown of the development of AI models more powerful than GPT-4, warning that such models could kill "every single member of the human species and all biological life on Earth."

Roughly 1,000 AI experts, including Tesla and Twitter CEO Elon Musk and Apple co-founder Steve Wozniak, have called for a temporary halt to the advancement of AI technology until safeguards can be put in place.

OpenAI CEO Sam Altman said ChatGPT “will make a lot of jobs just go away” in an interview with Lex Fridman.

Researchers at market-leading AI firm OpenAI and at the University of Pennsylvania are predicting that up to 80 percent of jobs could be impacted by AI technologies, which are rapidly increasing in sophistication.

A recent bug in OpenAI's ChatGPT AI chatbot allowed users to see other people's conversation histories, raising concerns about user privacy. OpenAI CEO Sam Altman said the company feels "awful" about the security breach.

As AI chatbots become more popular, concerns about their ability to interpret information and provide accurate facts continue to rise. Different AI products are citing each other and demonstrating an inability to differentiate between satire and serious stories, creating an environment where their responses lack credibility.

Canadian Prime Minister Justin Trudeau said artificial intelligence (AI) engineers need "diversity" in order to prevent the development of "evil" algorithms.

Researchers at Stanford University have built an AI that they claim matches the capabilities of OpenAI’s ChatGPT, which currently leads the market in consumer-facing AI products. However, while powerful AIs seem to be easy and cheap to build, running them is a different matter.

A Stanford University professor and AI expert says he is "worried" after the latest iteration of OpenAI's chatbot, ChatGPT (GPT-4), allegedly tried to devise a plan to take over his computer and "escape." He is concerned that "we are facing a novel threat: AI taking control of people and their computers."

AI technologies invented by scientists at the University of British Columbia and BC Cancer have succeeded in discovering a previously unknown treatment pathway for an aggressive form of liver cancer, designing a new drug to treat it in the process.

OpenAI CEO Sam Altman is a “little bit scared” of AI — not for the usual, apocalyptic reasons, but for a more leftist concern: its potential to spread “disinformation.”

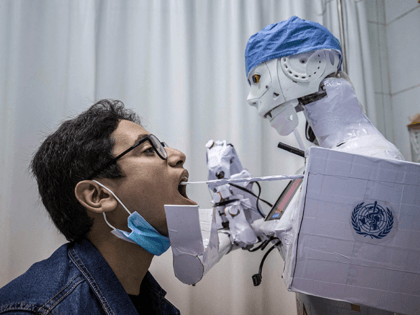

A recent investigation by STAT News found that AI algorithms have influenced how Medicare insurers deny coverage to patients. In some cases, insurers cut off benefits for elderly patients because the algorithm predicted they should have recovered by then, ignoring what human doctors had to say about the patients' condition.

PwC has announced a strategic partnership with AI startup Harvey for a 12-month contract to streamline the work of its 4,000 lawyers. The professional services giant claims its AI will not provide legal advice or replace lawyers.

Microsoft’s commitment to AI ethics has been called into question after the software giant laid off a team dedicated to guiding AI innovation in a manner that respects privacy, transparency, and security. The company’s decision to ditch its AI ethics team is especially questionable given its rapid expansion of ChatGPT-powered AI in its software products.

According to a recent study by conservative think tank the Manhattan Institute, the AI language model ChatGPT, developed by OpenAI, has been found to have leftist biases and to be more tolerant of “hate speech” directed at conservatives and men.

As startups race to integrate AI into their products, they are running into a major roadblock: spiraling costs, caused by the immense computing power required to process AI queries.

Microsoft, which is devoting its resources to the AI race and has a head start thanks to its bankrolling of ChatGPT creator OpenAI, has reportedly spent a sum "probably larger" than several hundred million dollars to assemble the computing power needed to support the AI company's projects.

The co-founder of OpenAI, the company behind AI chatbot ChatGPT, recently admitted that the firm “made a mistake” by going woke and that the chatbot’s system “did not reflect the values we intended to be in there,” following accusations of political bias.

Director Steven Spielberg and political activist Noam Chomsky say AI is soulless and terrifying. An article co-authored by Chomsky claims that “ChatGPT exhibits something like the banality of evil: plagiarism and apathy and obviation.”

Chinese internet giant Baidu is scrambling to ready the communist regime's first ChatGPT equivalent, with hundreds of employees working around the clock to launch the AI-powered chatbot by March 16.

The AI research firm behind the notoriously woke chatbot ChatGPT, OpenAI, is now offering businesses and developers subscriptions to the tool so that they can integrate woke AI into their own apps.

Researchers at Cornell University were able to convert Microsoft’s Bing AI into a scammer that requests compromising information from users, including their name, address, and credit card information.

Louisiana State University (LSU) was forced to issue a statement after the university's star gymnast, Olivia Dunne, posted a TikTok video promoting the use of Caktus AI, a cheating tool that promises to "automate all of your school work."

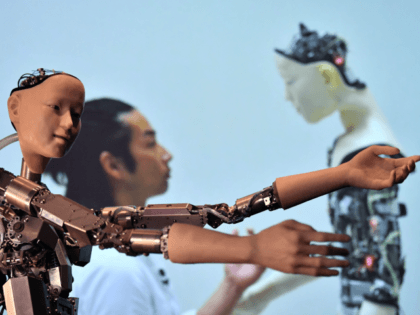

As AI continues to grow in popularity, certain U.S. representatives are calling for further legislation around the technology, but many lawmakers know little about AI. Rep. Jay Obernolte (R-CA) explains, "You'd be surprised how much time I spend explaining to my colleagues that the chief dangers of AI will not come from evil robots with red lasers coming out of their eyes."

Apple blocked an update for an app powered by the AI chatbot ChatGPT, as concerns grow over the harm that could result from AI, especially for underage users.

Tesla CEO Elon Musk recently predicted that AI-powered humanoid robots produced by the electric car company will one day outnumber humans. Musk has faced criticism following October’s completely underwhelming introduction of the Optimus robot.

Microsoft’s Bing chatbot has been returning some unhinged and threatening responses to users. The company has now updated the bot with three new modes that aim to fix the issue by allowing users to select how crazy the AI gets.

Microsoft seems to be preparing for the next iteration of the Windows operating system, which will have a strong focus on AI, according to The Verge. While the next version, Windows 12, has not been announced yet, Intel is reportedly planning next-generation CPUs that will support the new operating system.

Following a frenzy of media stories about the new power of generative AI technologies such as Microsoft-backed ChatGPT and AI image generators like Stable Diffusion, investors are pouring money into the burgeoning industry.

Axel Springer, the international media conglomerate based in Germany, has warned that journalism may be “simply replaced” by AI. The warning came as the company slashed an undisclosed number of jobs, as it aims to improve its annual results by over $100 million in three years.

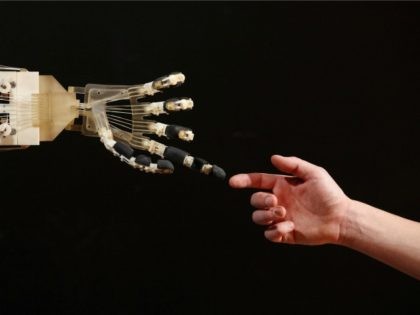

Scientists at Johns Hopkins University are working on research to enable AI to be constructed using human brain cells, arguing that organic materials are more efficient than traditional computing systems.

Microsoft continues to double down on AI, adding its AI-powered Bing search engine to new Windows computers despite stories about its deranged responses to user inputs.

Tech giant Facebook reportedly has plans to step into the world of AI with the development of “AI personas” for its platforms. Mark Zuckerberg hopes to cash in on the AI craze sparked by ChatGPT, the woke chatbot already notorious for its leftist bias.

According to a recent report, some companies have begun replacing employees with the woke AI tool ChatGPT developed by OpenAI. About half of surveyed executives at companies already using the AI chatbot say they are using the technology in place of human workers for some tasks.

Bank accounts that use voice authentication can be broken into using AI technology, according to a Vice Media writer who hacked into his own bank account using the technique.