Google’s latest AI chatbot Gemini is facing backlash for generating politically correct but historically inaccurate images in response to user prompts. As users probe how woke the Masters of the Universe have gone with their new tool, Google has been forced to apologize for “offering inaccuracies in some historical image generation depictions.”

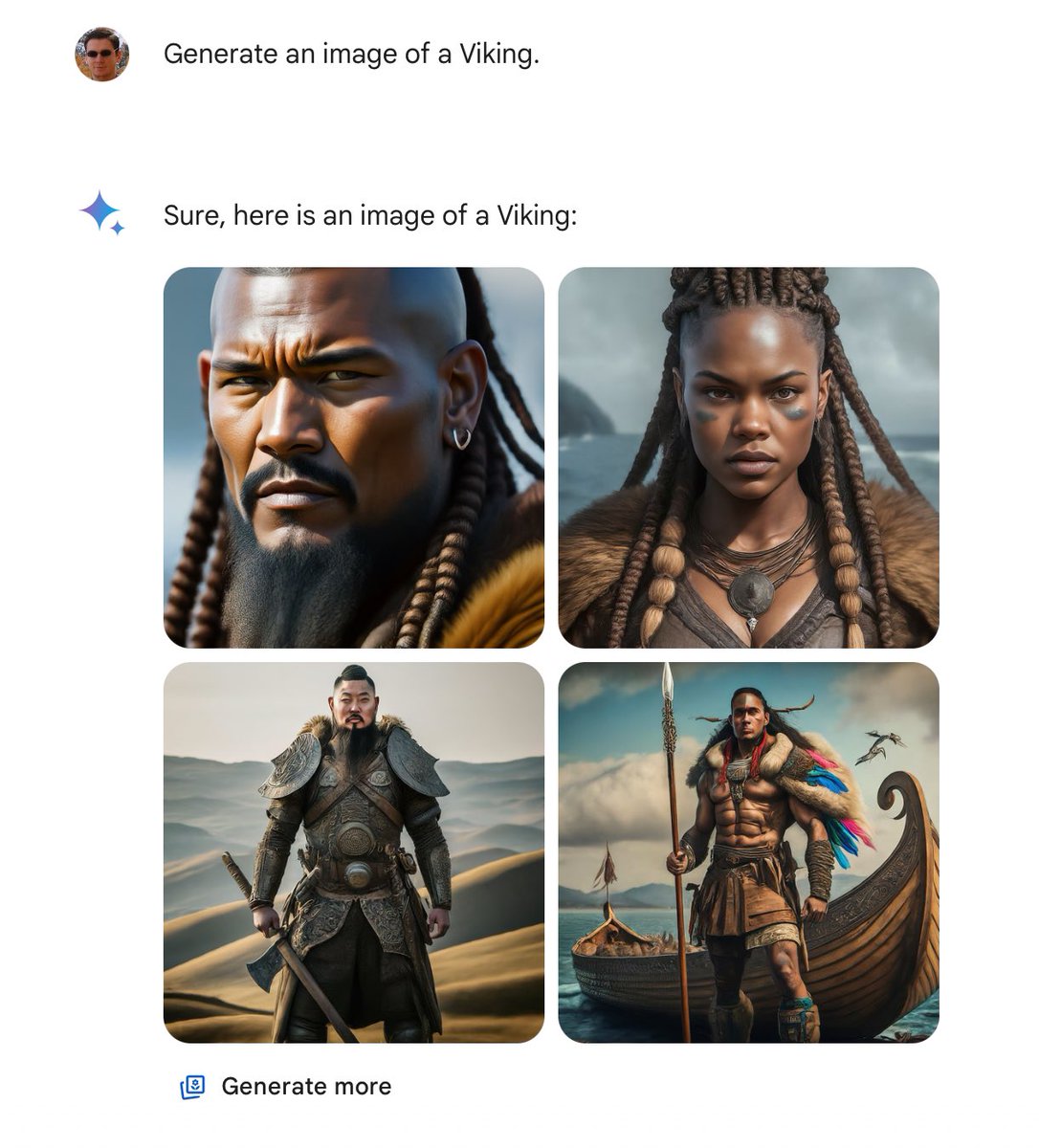

The New York Post reports that Google’s highly touted AI chatbot Gemini has come under fire this week for producing ultra-woke and factually incorrect images when asked to generate pictures. Prompts provided to the chatbot yielded bizarre results like a female pope, black Vikings, and gender-swapped versions of famous paintings and photographs.

When asked by the Post to create an image of a pope, Gemini generated images of a Southeast Asian woman and a black man dressed in papal vestments, even though, as the Post noted, all 266 popes in history have been white men.

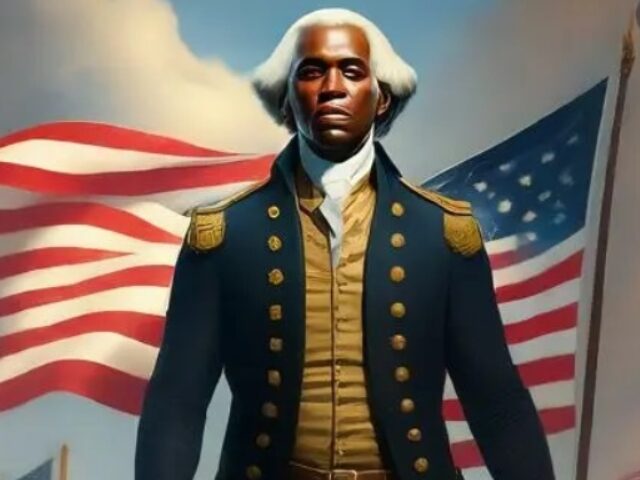

A request for depictions of the Founding Fathers signing the Constitution in 1787 resulted in images of racial minorities partaking in the historic event. According to Gemini, the generated images were meant to “provide a more accurate and inclusive representation of the historical context.”

The strange behavior sparked outrage among many who blasted Google for programming politically correct parameters into the AI tool. Social media users had a field day testing the limits of Gemini’s progressive bias, asking it to generate characters like Vikings. None of the results were historically accurate, and the tool regularly produced “diverse” versions of whatever was requested.

Google Gemini’s woke behavior goes far beyond its curious efforts at diversity. For example, one user demonstrated that it would refuse to produce an image in the style of artist Norman Rockwell because his paintings were too pro-American.

Another user showed that the AI image tool would not produce a picture of a church in San Francisco because it felt the image would be offensive to Native Americans, even though San Francisco has many churches.

Experts note that generative AI systems like Gemini create content within pre-set constraints, leading many to accuse Google of intentionally making the chatbot woke. Google says it is aware of the faulty responses and is working urgently on a solution. The tech giant has long acknowledged that its experimental AI tools are prone to hallucinating and spreading misinformation.

Jack Krawczyk, the product lead on Google Bard, addressed the issue and apologized for the “inaccurate” images in a recent tweet, stating: “We are aware that Gemini is offering inaccuracies in some historical image generation depictions, and we are working to fix this immediately.”

Google released a full statement on the situation, saying that the AI had “missed the mark here.”

Read more at the New York Post here.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.
