A new report from Cambridge University warns that rogue states, criminal gangs, and terrorist organizations could use artificial intelligence technology to wreak untold havoc in the very near future.

The report, entitled “The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation,” was created with the assistance of top experts from universities, research groups, and think tanks specializing in A.I. research, cybersecurity, and national defense. Cambridge describes it as “a clarion call for governments and corporations worldwide to address the clear and present danger inherent in the myriad applications of A.I.”

“A.I. has many positive applications, but is a dual-use technology and A.I. researchers and engineers should be mindful of and proactive about the potential for its misuse,” the report warns.

The report sees early rumblings of the A.I. threat in current cases of bot programs and viral malware that can far exceed the scope of attacks envisioned by those who deploy them, if indeed those attackers care about the scale of the damage they unleash at all. Advances in artificial intelligence technology will make this type of threat exponentially worse, and frankly, the cybersecurity community is already struggling to deal with the threats we face today.

One danger cited in the report is that benevolent A.I. developments, such as self-driving automobiles, will create new opportunities for mischief. The idea of a rogue state or terrorist operation taking control of self-driving vehicles to inflict horrendous damage and loss of life is already becoming a staple of popular culture. (For that matter, the good guys do it in the current box-office smash Black Panther.)

Artificial intelligence systems will inevitably become a point of vulnerability as they deliver more benefits to the public, as has been the case with every stage of the information technology revolution. A.I. can also be directly weaponized.

One general threat outlined in the report is that A.I. can make all sorts of malevolent activities less labor-intensive: a single hacker or terrorist will be able to do the work of ten, or a hundred. A more specific near-term threat is that expert systems are already improving the quality of “phishing” scam emails, personalized messages that appear to come from trusted contacts but are actually designed to trick users into revealing their passwords or installing malware on their systems.

In a similar vein, current concerns about botnets spreading “fake news” and manipulating social media could be greatly exacerbated by A.I. technology that makes bot networks faster, better able to respond to shifting tides of opinion on social media, and perhaps even capable of fabricating convincing “visual evidence” in the form of doctored photos and video clips. Just about everything troublesome in today’s online environment could become far worse when managed by A.I. systems that work much faster than human miscreants and never get tired.

The automation threat extends to the control of physical weapons as well. With today’s technology, terrorists or rogue-state saboteurs could conceivably use commercially available drones for reconnaissance ahead of attacks, or as flying bombs. Tomorrow they will be able to unleash swarms of computer-guided remote vehicles, including very small robots that are difficult for security forces to detect or counteract. Drone terrorism is such a clear and present danger that the Cambridge authors seem faintly surprised a major attack hasn’t happened yet, especially given that extremist groups like the Islamic State have been learning to use inexpensive consumer-grade drones to conduct air attacks on Middle Eastern battlefields.

The authors note they are not concerned with far-fetched science-fiction scenarios. The horizon for most of the crime and terrorism dangers outlined in the report is five to ten years. A great deal of the discussion concerns plausible extrapolations of systems currently deployed in the real world or emerging from successful government and corporate prototype tests.

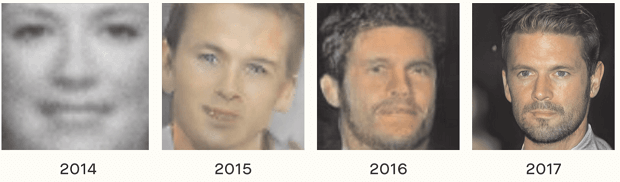

A striking visual illustration of how rapidly A.I. capabilities are evolving is provided by comparing the quality of computer-generated human faces—in other words, a computer system was instructed to rummage through biometric databases and invent the most realistic human it can render:

The Cambridge study invites us to imagine how much worse phishing and identity theft will become when a single hacker can command an A.I. system to imitate the faces, voices, and writing styles of thousands of people in a matter of hours.

At stake is nothing less than the stability of entire societies, which the report warns could descend into “an ongoing state of low- to medium-level attacks, eroded trust within societies, between societies and their governments, and between governments.” A.I. will improve the quality of security as well, but there are reasons to worry that threats will multiply faster than countermeasures. Top-shelf security can only be developed by the biggest corporate and government actors, but severe threats could fall into the hands of numerous small groups and individual miscreants.

The Cambridge report concludes with some recommendations for developing security standards that can better protect the advanced systems of tomorrow and restrain the dangers of weaponized artificial intelligence. It admits, however, that no consensus yet exists on the nature of the threats that will face us in the 2020s, let alone on how those dangers can be mitigated without sacrificing the benefits of A.I. or compromising the civil rights of human citizens. We are asking human planners to prepare defenses against threats that will not be carried out by human saboteurs. Ten years further down the road, we may be facing threats that were not conceived by human minds.
